- Overview of a broad field of specialized technology

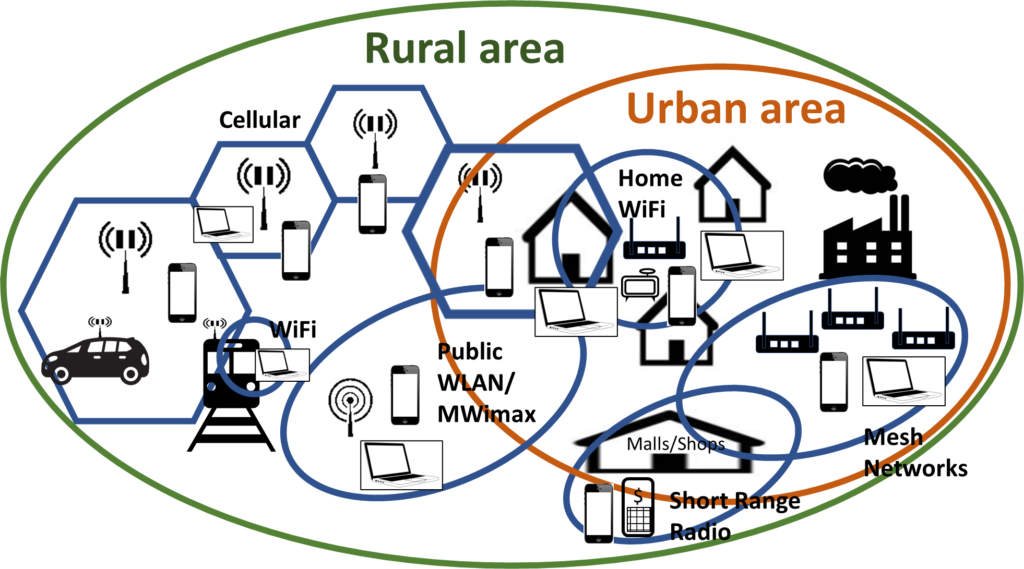

Wireless and mobile connectivity is one of the fastest-growing access methods and is used in almost every industry to gain independence from location and enable freedom of movement. Many different radio technologies and standards are available on the market, but each is usually designed for, and performs well in, only particular use cases.

In the previous article, we outlined how different types of radio access could be used for IoT applications and what the associated advantages and disadvantages were. In this article, we will provide definitions and elaborations on these radio technologies.

- Low-power, long-range radio technology

(LPWAN low power wide area networks)

Low-power wide area networks (LPWANs) transport data, status, and information from connected low-power, autonomous sensors and devices to the decision-making application for storage in the data backend.

Overview LPWAN

Narrow-band IoT (NB-IoT)

LTE Cat NB1, known as NB-IoT, is derived from the LTE standard and is also specified in 3GPP Release 13. It is designed for IoT applications that are even more constrained than those using eMTC. This technology is based on narrow-band communications and uses a bandwidth of 180 kHz. As a result, the data rate is greatly reduced (around 250 kbps downlink and 20 kbps uplink), which makes firmware-over-the-air (FotA) updates hard to achieve using NB-IoT. On the bright side, NB-IoT consumes less energy and benefits from a greater range than eMTC.

Enhanced Machine-Type Com. (eMTC, LTE-M)

Long Term Evolution (LTE, 4G) is a standard from the 3GPP. LTE Cat M1, known as either LTE-M or eMTC, is derived from the LTE standard and designed for machine-to-machine (M2M) communications (e.g., IoT). eMTC is a simplified version of LTE that aims to draw less battery power and to extend range. In contrast to classic LTE, eMTC reduces the data rate to a tenth of LTE (up to 1 Mbps) and strips down the bandwidth from 20 MHz to 1.4 MHz. eMTC supports full-duplex operation and, optionally, half-duplex operation to reduce power consumption.

Long Range (LoRa)

LoRa is a proprietary technology from Semtech. Based on Chirp Spread Spectrum (CSS) modulation, it can use several bands of the sub-GHz ISM spectrum, depending on the geographical location. LoRa communications are reasonably resilient to detection and jamming and are robust against Doppler shift. LoRa offers several parameters that can be modified (e.g., spreading factor) to adjust the trade-off between range and data rate (from 0.3 to 50 kbps). LoRa is the physical-layer technology; LoRaWAN, supported by the LoRa Alliance, is an open protocol for the MAC and network layers built on top of it.
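The spreading-factor trade-off can be made concrete with the nominal LoRa bit-rate formula commonly quoted from Semtech's modem documentation, `SF * (BW / 2**SF) * coding rate`. The sketch below is only an illustration: the 125 kHz bandwidth and the 4/5 coding rate are assumptions that do not come from this article, and the function name is hypothetical. The upper end of the range quoted above depends on other settings that are not modelled here.

```python
def lora_bit_rate(spreading_factor: int,
                  bandwidth_hz: float = 125_000.0,
                  coding_rate_denominator: int = 5) -> float:
    """Nominal LoRa bit rate in bit/s.

    Assumed defaults (not from the article): 125 kHz bandwidth and a
    4/5 coding rate, i.e. 4 data bits carried per 5 transmitted bits.
    """
    if not 6 <= spreading_factor <= 12:
        raise ValueError("spreading factor must be between 6 and 12")
    if coding_rate_denominator not in (5, 6, 7, 8):
        raise ValueError("coding rate must be 4/5, 4/6, 4/7 or 4/8")
    # One symbol (chirp) carries `spreading_factor` bits and lasts 2**SF / BW seconds.
    symbols_per_second = bandwidth_hz / (2 ** spreading_factor)
    return spreading_factor * symbols_per_second * 4 / coding_rate_denominator


if __name__ == "__main__":
    # Higher spreading factors give more range but a lower bit rate.
    for sf in range(7, 13):
        print(f"SF{sf}: {lora_bit_rate(sf):8.1f} bit/s")
```

Under these assumptions, the highest spreading factor (SF12) lands at roughly 0.3 kbps, which matches the lower bound of the range given above.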

Sigfox (proprietary end-to-end solution for IoT connectivity)

Sigfox positions itself as an alternative network operator and deploys base stations around the world. This technology uses Binary Phase Shift Keying (BPSK) modulation over an Ultra-Narrow-Band (UNB) carrier in the sub-GHz ISM bands. UNB greatly reduces noise levels, which extends the communication range. The trade-off is a very low data rate of 100 bps. To respect the duty-cycle regulation imposed on the sub-GHz bands, Sigfox limits uplink communications to 140 transmissions of 12 bytes payload, and downlink communications to 4 transmissions of 8 bytes payload, per day and per device.
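To get a feel for what these limits mean in practice, the arithmetic can be sketched as follows. The numbers are the ones quoted above; the function names are hypothetical, and the airtime figure counts payload bits only (frame overhead and repeated transmissions are deliberately ignored, which is an assumption of this sketch).

```python
# Limits quoted in the text above.
UPLINK_MESSAGES_PER_DAY = 140
UPLINK_PAYLOAD_BYTES = 12
DOWNLINK_MESSAGES_PER_DAY = 4
DOWNLINK_PAYLOAD_BYTES = 8
DATA_RATE_BPS = 100


def daily_payload_bytes(messages_per_day: int, payload_bytes: int) -> int:
    """Maximum application payload per device and day, in bytes."""
    return messages_per_day * payload_bytes


def payload_airtime_seconds(payload_bytes: int,
                            data_rate_bps: float = DATA_RATE_BPS) -> float:
    """Time needed to send the payload bits alone (no frame overhead)."""
    return payload_bytes * 8 / data_rate_bps


def average_interval_minutes(messages_per_day: int) -> float:
    """Average spacing between messages if they are spread evenly over 24 hours."""
    return 24 * 60 / messages_per_day


if __name__ == "__main__":
    uplink = daily_payload_bytes(UPLINK_MESSAGES_PER_DAY, UPLINK_PAYLOAD_BYTES)
    downlink = daily_payload_bytes(DOWNLINK_MESSAGES_PER_DAY, DOWNLINK_PAYLOAD_BYTES)
    print(f"Uplink budget:   {uplink} bytes/day")
    print(f"Downlink budget: {downlink} bytes/day")
    print(f"Payload airtime: {payload_airtime_seconds(UPLINK_PAYLOAD_BYTES):.2f} s per uplink message")
    print(f"Uplink spacing:  one message every {average_interval_minutes(UPLINK_MESSAGES_PER_DAY):.1f} min")
```

In other words, a device can report a small reading roughly every ten minutes, which suits slow-changing sensor values but not streaming data.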

Cost factors

Telecom versus other low-power, long-range technology costs to connect [in USD]

| Technology | One module | Connectivity | Infrastructure |
| --- | --- | --- | --- |
| LTE-M | 10-15 | 3-5 / month for 1 Mb | |
| NB-IoT | 7-12 | <1 / month for 100 kb | |
| Sigfox | 5-10 | <1 / month | |
| LoRaWAN (public) | 9-12 | 1-2 / month | |
| LoRaWAN (private) | 9-12 | 0.25 / month | 500 |
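As a rough illustration of how these figures combine, the sketch below computes an upper-bound cost per technology for a fleet of devices. It uses only the upper end of each range in the table above and makes several assumptions that are not in the article: "<1" is treated as 1 USD, the infrastructure figure is treated as a single one-off cost for the whole deployment, and the differing data allowances are ignored. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TechnologyCost:
    """Upper-bound cost figures in USD, taken from the table above."""
    name: str
    module_max: float           # upper end of the per-module price range
    monthly_max: float          # upper end of the monthly connectivity fee
    infrastructure: float = 0.0 # assumed to be a one-off cost per deployment


COSTS = [
    TechnologyCost("LTE-M", 15, 5),
    TechnologyCost("NB-IoT", 12, 1),            # "<1" treated as 1
    TechnologyCost("Sigfox", 10, 1),            # "<1" treated as 1
    TechnologyCost("LoRaWAN (public)", 12, 2),
    TechnologyCost("LoRaWAN (private)", 12, 0.25, infrastructure=500),
]


def upper_bound_cost(cost: TechnologyCost, devices: int, months: int) -> float:
    """Worst-case total: hardware plus connectivity for every device, plus infrastructure."""
    per_device = cost.module_max + months * cost.monthly_max
    return devices * per_device + cost.infrastructure


if __name__ == "__main__":
    devices, months = 100, 36  # hypothetical fleet size and lifetime
    for cost in COSTS:
        print(f"{cost.name:<18} {upper_bound_cost(cost, devices, months):>10.2f} USD")
```

With this hypothetical fleet, the one-off infrastructure cost of a private LoRaWAN network is quickly offset by its low monthly fee, whereas recurring connectivity dominates the LTE-M total.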

- Short range radio technology

This refers to transporting data, status, and information from nearby sensors, access points, and devices (within a room or a house) to the decision-making application for storage in the data backend.

Overview short range tech

WLAN/Wi-Fi

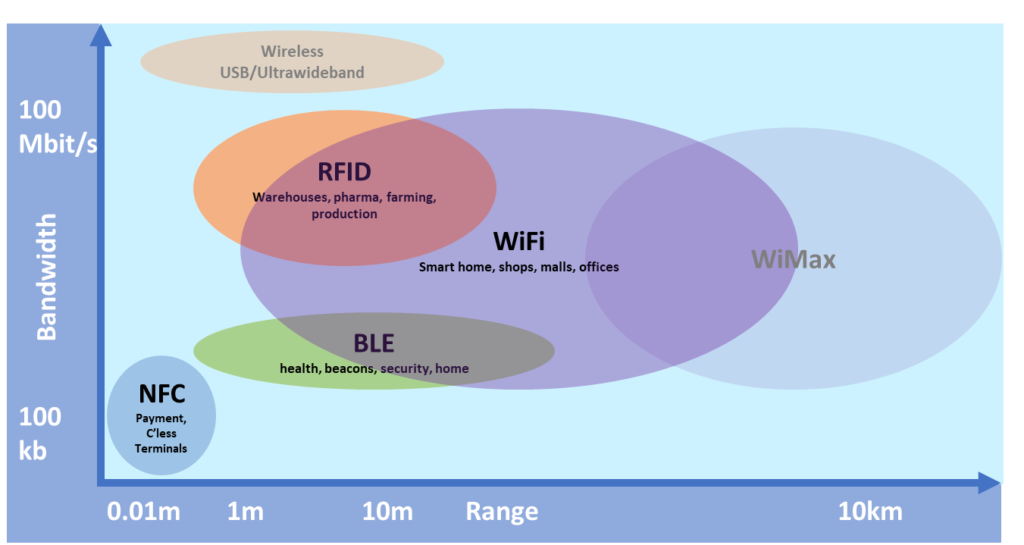

Wi-Fi is a family of wireless network protocols based on the IEEE 802.11 family of standards. Wi‑Fi is a trademark of the non-profit Wi-Fi Alliance. Wi-Fi technology may be used to provide local network and Internet access to devices that are within range of one or more routers connected to the Internet. An exception aimed at longer range is Wi-Fi HaLow (IEEE 802.11ah), which extends Wi-Fi into the 900 MHz band, enabling the low-power connectivity necessary for applications including sensors and wearables. Because this frequency band is freely available for basic communications, HaLow is also a standard for IoT.

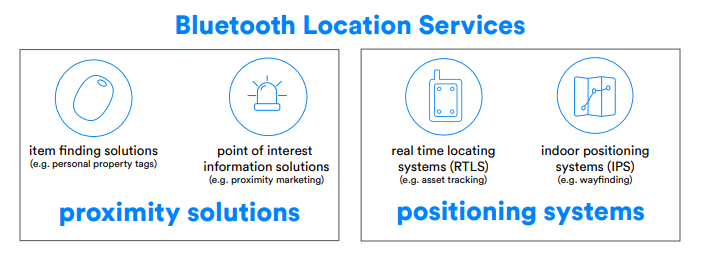

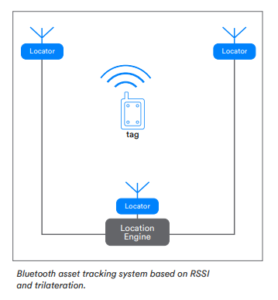

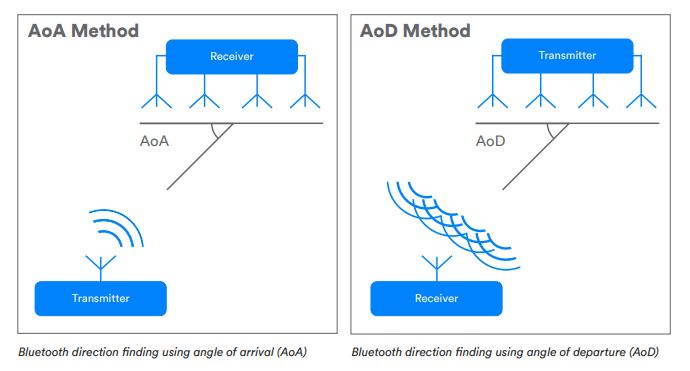

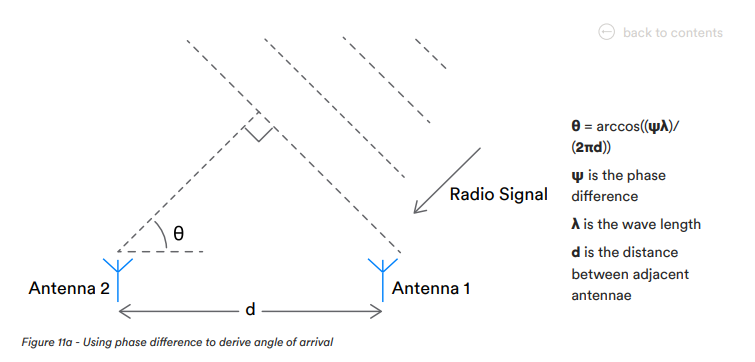

Bluetooth / BLE

Bluetooth is a wireless technology standard used for exchanging data between fixed and mobile devices over short distances using short-wavelength UHF radio waves in the industrial, scientific, and medical radio bands, from 2.402 GHz to 2.480 GHz, and for building personal area networks (PANs). Bluetooth Low Energy (Bluetooth LE) is a wireless personal area network technology designed and marketed by the Bluetooth Special Interest Group (Bluetooth SIG) aimed at novel applications in the healthcare, fitness, beacons, security, and home entertainment industries.

NFC

Near-field communication (NFC) is a set of communication protocols for communication between two electronic devices over a distance of 4 cm (1½ in) or less. NFC offers a low-speed connection with simple setup that can be used to bootstrap more capable wireless connections.

NFC devices can act as electronic identity documents and key cards. They are used in contactless payment systems and allow mobile payment replacing or supplementing systems such as credit cards and electronic ticket smart cards. NFC can be used for sharing small files (e.g., contacts) and bootstrapping fast connections to share larger media such as photos, videos, etc.

RFID

Radio-frequency identification (RFID) uses electromagnetic fields to automatically identify and track tags attached to objects. An RFID tag contains a tiny radio transponder (a combination of radio receiver and transmitter). When triggered by an electromagnetic interrogation pulse from a nearby RFID reader device, the tag transmits digital data, usually an identifying inventory number, back to the reader. This number can be used to inventory goods. RFID tags are used in many industries. For example, automobile companies often use it to track progress through the assembly line, pharmaceutical companies often use it to track inventory through their warehouses, and farmers and pet owners are increasingly implanting RFID microchips to track and identify livestock and pets.

- IoT mesh technology and solutions

IoT mesh technologies transport data, status, and information from nearby sensors (within a room or a house), industrial areas, and nearby rural areas via access points and devices to the decision-making application for storage in the data backend. This is achieved without any network planning or any other physical or technical construction work related to connectivity.

Simply place and play: the radio network configures itself and is prepared to handle many nodes and comprehensive infrastructure. The reliability, performance, safety and security features for this solution have been greatly improved in the last decade.

Wirepas Mesh solution (for massive IoT, reliable and cost-efficient IoT solutions)

Wirepas Mesh is a wireless connectivity technology for massive IoT. Wirepas Mesh running in the devices enables a scalable, reliable, and cost-efficient IoT solution. The network provides one horizontal connectivity layer for all IoT use cases: collecting data from sensors for an IoT application in the cloud, controlling remotely located devices, communicating device-to-device in the network with or without the cloud, and tracking the location of moving assets. All the networking intelligence is included in the Wirepas Mesh software to form a resilient, large-scale wireless mesh network. The radio is compliant with IEEE 802.15.1, and the solution targets similar use cases to Zigbee, Thread, and other similar protocols. Currently supported off-the-shelf SoCs are the Nordic nRF52832/33/40 and the Silicon Labs EFR32 FG12/13.

Zigbee®

Robust, low-power mesh networks for smart homes and buildings

Zigbee is a standards-based wireless mesh network used widely in building automation, lighting, smart city, medical, and asset tracking. We have been a promoting member of the Zigbee Alliance for more than 10 years, providing robust stack delivery with the latest standards. Our Zigbee portfolio offers the lowest power mesh solutions enabling multi-year coin cell use or battery-less operation across industrial temperatures.

The technology defined by the Zigbee specification is intended to be simpler and less expensive than other wireless personal area networks (WPANs), such as Bluetooth and more general wireless networking such as Wi-Fi. Applications include wireless light switches, home energy monitors, traffic management systems, and other consumer and industrial equipment that requires short-range low-rate wireless data transfer.

Its low power consumption limits transmission distances to 10–100 meters line-of-sight, depending on power output and environmental characteristics.[2] Zigbee devices can transmit data over long distances by passing data through a mesh network of intermediate devices to reach more distant ones. Zigbee is typically used in low data rate applications that require long battery life and secure networking. Zigbee networks are secured by 128-bit symmetric encryption keys. Zigbee has a defined rate of 250 kbit/s, best suited for intermittent data transmissions from a sensor or input device.
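The idea of reaching distant nodes by relaying through intermediate devices can be illustrated with a small path search. This is only a conceptual sketch: it uses a plain breadth-first search over a hypothetical link table and is not Zigbee's actual routing algorithm. The node names and the `fewest_hops_path` function are invented for the example.

```python
from collections import deque
from typing import Dict, List, Optional

# Hypothetical mesh: each node lists the neighbours within its radio range.
EXAMPLE_LINKS: Dict[str, List[str]] = {
    "sensor": ["router_a"],
    "router_a": ["sensor", "router_b"],
    "router_b": ["router_a", "coordinator"],
    "coordinator": ["router_b"],
    "isolated": [],
}


def fewest_hops_path(links: Dict[str, List[str]],
                     source: str,
                     destination: str) -> Optional[List[str]]:
    """Return a path with the fewest hops, or None if the destination is unreachable."""
    queue = deque([[source]])
    visited = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == destination:
            return path
        for neighbour in links.get(node, []):
            if neighbour not in visited:
                visited.add(neighbour)
                queue.append(path + [neighbour])
    return None


if __name__ == "__main__":
    path = fewest_hops_path(EXAMPLE_LINKS, "sensor", "coordinator")
    # The sensor cannot reach the coordinator directly, so two routers relay the data.
    print(" -> ".join(path) if path else "unreachable")
```

Each hop here stays within short radio range, yet the end-to-end distance covered is the sum of all hops, which is how a mesh extends coverage beyond the reach of a single link.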